Stable Diffusion 3, the next generation of the popular open-source AI image generation model, has been unveiled by StabilityAI and is an impressive advancement.

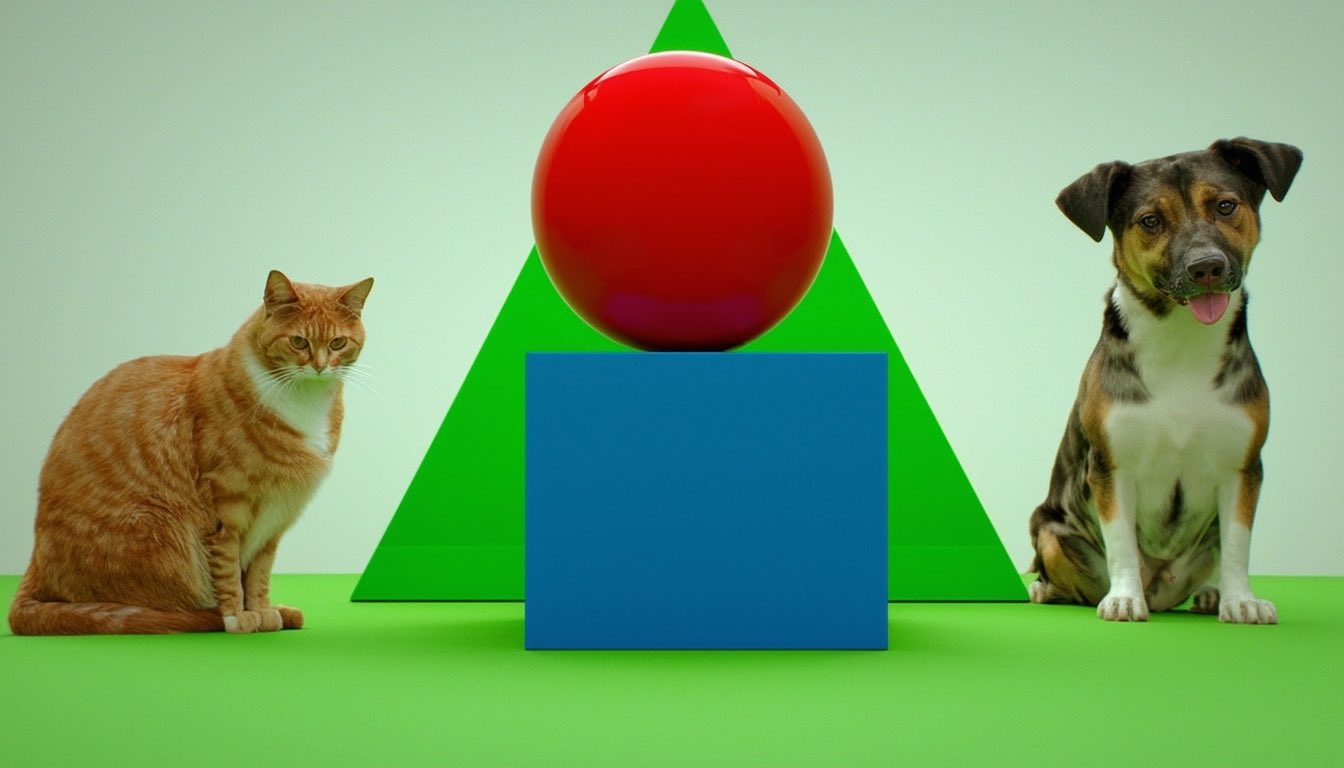

Details of the new model have been revealed alongside a series of images and prompts showing that it is capable of following complex instructions and creating hyper-realistic images.

This early preview of the model is available only to a select group of testers while StabilityAI collects feedback to improve performance and safety ahead of a public release.

StabilityAI also used Spawning’s “Do Not Train” registry to exclude images from artists who did not want their work used to train AI. Before training, over 1.5 billion images were filtered from the dataset.

What is Stable Diffusion 3?

We are announcing Stable Diffusion 3, our most powerful text-to-image model, leveraging a diffusion transformer architecture for significantly improved multi-topic prompt performance, image quality, and spelling capabilities. Today we open the waiting list for an early preview. This phase… pic.twitter.com/FRn4ofC57s (February 22, 2024)

Unlike DALL-E, MidJourney or Google’s Imagen, Stable Diffusion is an open model that can be integrated into other platforms or even run locally if you have enough computing power.

SD3 will come as a family of models with between 800 million and eight billion parameters, offering different levels of quality and allowing it to run on a wide range of hardware.
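
To give a rough sense of what those sizes mean for hardware, the sketch below estimates the memory needed just to hold the weights. It assumes 16-bit weights (two bytes per parameter), which is a common choice but not something StabilityAI has confirmed for SD3, and it ignores text encoders, activations and everything else a real pipeline needs. The function name `weight_memory_gb` is made up for this illustration.

```python
def weight_memory_gb(num_parameters, bytes_per_parameter=2):
    """Approximate memory for the weights alone, in gigabytes (1 GB = 1e9 bytes).

    Assumes every parameter is stored at the same precision; 2 bytes
    corresponds to 16-bit weights, which is an assumption, not an SD3 spec.
    """
    return num_parameters * bytes_per_parameter / 1e9


# The two ends of the parameter range quoted for SD3.
sizes = [
    ("smallest SD3 variant", 800_000_000),
    ("largest SD3 variant", 8_000_000_000),
]

for name, params in sizes:
    print(f"{name}: ~{weight_memory_gb(params):.1f} GB of weights at 16-bit precision")
```

Under that assumption the range is roughly 1.6 GB to 16 GB for the weights alone, which is why the smaller variants are the ones likely to fit on consumer hardware.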

Like OpenAI’s Sora, Stable Diffusion 3 combines diffusion model technology with a transformer architecture, which could explain its improved ability to follow instructions.

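It also uses flow matching, a mathematical technique for training diffusion models. During training, the model is shown samples part-way between random noise and a real image and is scored on the difference between the direction it predicts and the direction that actually leads to the image, at different stages of the process.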
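
The idea behind flow matching can be sketched in a few lines of plain Python. This is a toy illustration of one common variant (a straight-line path from noise to image), not StabilityAI's code; the research paper has not been published, so the exact formulation SD3 uses is an assumption here, and the names `interpolate`, `target_velocity` and `flow_matching_loss` are invented for the example.

```python
import random


def interpolate(noise, image, t):
    """Point on the straight path from noise (t=0) to image (t=1)."""
    return [(1.0 - t) * n + t * x for n, x in zip(noise, image)]


def target_velocity(noise, image):
    """Direction of travel along that straight path; it does not depend on t."""
    return [x - n for n, x in zip(noise, image)]


def flow_matching_loss(predict_velocity, image, rng):
    """Mean squared error between predicted and target velocity at a random t.

    predict_velocity stands in for the neural network: it receives the
    partly noisy sample and the time t, and returns a velocity estimate.
    """
    noise = [rng.gauss(0.0, 1.0) for _ in image]
    t = rng.random()  # a random stage of the process, in [0, 1)
    x_t = interpolate(noise, image, t)
    predicted = predict_velocity(x_t, t)
    target = target_velocity(noise, image)
    return sum((p - v) ** 2 for p, v in zip(predicted, target)) / len(image)


# Toy usage: a four-"pixel" image and a stand-in model that always predicts zero.
image = [0.2, 0.5, 0.9, 0.1]


def untrained_model(x_t, t):
    return [0.0] * len(x_t)


loss = flow_matching_loss(untrained_model, image, random.Random(0))
print(f"loss for the untrained stand-in model: {loss:.4f}")
```

Training would repeat this over many images and many values of `t`, adjusting the model to push the loss down. At generation time the learned velocity is followed step by step from pure noise toward an image.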

What can Stable Diffusion 3 do?

So far, only a few people outside the development team have had direct access to Stable Diffusion 3, and the research paper has yet to be published. So what we know about its abilities comes from what the team has said and the results they have shared.

From what I can see of the images so far, this is a significant step forward for generative images. Along with OpenAI’s Sora, it points to a major improvement in how generative AI works and how well it works.

It appears to produce consistent, extended and legible text in images, to resolve the problems with human anatomy, including fingers, and to capture colors well.

Emad Mostaque, founder of StabilityAI, said that the company has 100 times fewer resources for training AI models than companies like OpenAI, but still does impressive work. He suggested that, like Sora, SD3 will be able to accept a range of inputs, including video and images.

Details about SD3 come a few days after StabilityAI unveiled Stable Cascade, a new image generation model that Mostaque said will work with SD3 in the future.